Why Most AI Implementations Fail (And What You Can Do Differently)

What happens when good leaders make bad AI decisions, and the framework to avoid joining them

Let’s stay connected: find me on LinkedIn and Medium to continue the conversation.

Have you ever seen someone with no experience buy a professional-grade bungee cord, rig their own setup based on something they saw in a movie, and jump off a bridge, trusting their skills simply because they bought quality equipment?

Of course not. Even the most confident person understands that buying professional gear doesn't make them a professional. They recognize their lack of expertise and refuse to jump without proper training. The cost is simply too high.

Yet this is exactly what happens when good leaders rush into AI integration.

They purchase professional-grade AI tools, design their own implementation strategy based on what they've seen others do, and leap into organizational change, trusting that good intentions and expensive software will catch them. They skip the guardrails, bypass team training, and assume their leadership experience will be enough.

The bungee cord might be professional grade, but you're still falling if you don't know how to set up the jump.

AI integration isn't like adopting new software or restructuring a department. It's like introducing a powerful force into your organization that can either amplify your mission or destroy the trust you've spent years building. The tools are sophisticated and expensive, but without proper expertise in implementation, you're essentially buying professional equipment and designing your own setup while hoping for the best.

I've watched mission-driven leaders with decades of experience make decisions that devastated their teams, wasted precious resources, and derailed their most important work. Not because they lacked wisdom, but because they treated AI implementation like any other operational change instead of recognizing that it requires specialized knowledge.

This is why I reached out to Jake Handy, creator of Handy AI, to share his insights and expertise on this topic. Together, we've identified the most common AI integration disasters that blindside good leaders, and a framework for preventing them that protects both your mission and your people.

Here’s Jake…

42% of AI projects are abandoned before they reach production, more than double last year's rate of 17%. These statistics are alarming, but they don't capture the wreckage these failures leave behind.

What I've learned from helping folks recover from “AI-gone-wrong” is that the failures aren't random acts. They follow predictable patterns, and they're almost always the result of poor planning (disguised as bold leadership).

The truth is, most AI failures aren't caused by the technology being too complex or immature. They're caused by leaders who treat AI like any other software purchase when it's a fundamentally different beast.

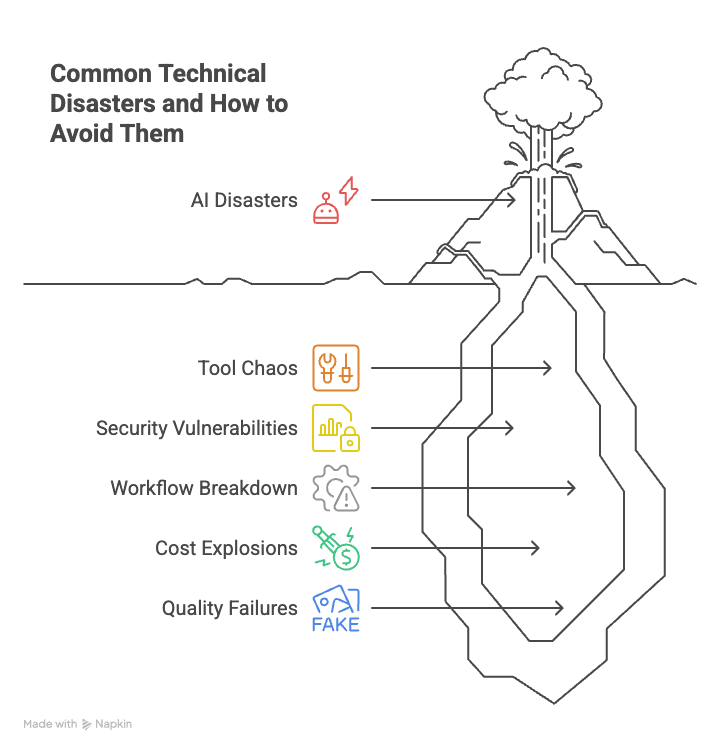

Here are the five most common technical disasters I see, and more importantly, what you can do to avoid them.

1. Tool chaos: When AI becomes a digital Tower of Babel

The first disaster is what I call "tool chaos": organizations adopting multiple AI platforms without any integration strategy. It happens when different departments see AI demonstrations, get excited, and each procures its own solution.

Marketing gets a content generation tool. Sales implements a different conversational AI. HR adopts its own screening algorithm. Finance brings in forecasting AI. IT isn't involved in any of these decisions.

Within six months, you have four or five AI systems that can't talk to each other, each with its own data requirements, security protocols, and user interfaces. Employee productivity can actually decrease as your staff spend more time switching between incompatible systems than they ever did on the manual processes. Your customer information is scattered across platforms, creating incomplete pictures and conflicting insights. Team members start making decisions based on different AI outputs, leading to inconsistent customer experiences and internal conflicts.

🚨 How to avoid it: Establish an AI governance committee, or at least a plan, before anyone purchases anything. Create a unified data strategy that defines how information flows between systems. If you already have multiple tools, have your IT folks conduct an integration audit and consolidate ruthlessly.
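An integration audit can start as something as plain as an inventory. The sketch below is a minimal illustration, not a prescribed audit method: it assumes a hypothetical inventory in which each tool records its owning department, what it does, whether IT reviewed it, and whether it connects to a shared data store. The tool names and field names are made up for the example. It flags the three warning signs described above: tools IT never saw, tools that keep their own data silo, and tools that duplicate each other.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class AITool:
    """One row in a hypothetical AI tool inventory (field names are illustrative)."""
    name: str
    department: str
    capability: str          # what the tool does, e.g. "chatbot"
    it_reviewed: bool        # did IT evaluate it before purchase?
    uses_shared_data: bool   # does it read/write the organization's shared data store?


def audit_inventory(tools: list[AITool]) -> list[str]:
    """Return readable findings: unreviewed tools, data silos, and overlaps."""
    findings: list[str] = []
    for tool in tools:
        if not tool.it_reviewed:
            findings.append(f"{tool.name} ({tool.department}): never reviewed by IT")
        if not tool.uses_shared_data:
            findings.append(f"{tool.name} ({tool.department}): keeps its own data silo")

    # Group tools by capability; more than one tool doing the same job
    # is a candidate for consolidation.
    by_capability: dict[str, list[str]] = defaultdict(list)
    for tool in tools:
        by_capability[tool.capability].append(tool.name)
    for capability, names in sorted(by_capability.items()):
        if len(names) > 1:
            findings.append(
                f"Overlap in '{capability}': {', '.join(sorted(names))} (consolidation candidate)"
            )
    return findings


if __name__ == "__main__":
    inventory = [
        AITool("ContentBot", "Marketing", "text generation", False, False),
        AITool("SalesChat", "Sales", "chatbot", False, False),
        AITool("HelpDeskChat", "Support", "chatbot", True, True),
        AITool("ForecastPro", "Finance", "forecasting", True, False),
    ]
    for finding in audit_inventory(inventory):
        print(finding)
```

A spreadsheet can do the same job; the point is that the questions are written down and asked of every tool, not just the ones someone happens to remember.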

2. Security vulnerabilities: The hidden catastrophe waiting to happen

Research from cybersecurity firm Wiz found that its researchers could discover exposed AI databases "within minutes" of starting their assessment. Even basic misconfigurations can leave sensitive data completely exposed.

Organizations can easily deploy AI chatbots that inadvertently store customer conversations containing Social Security numbers, medical information, and financial details in publicly accessible databases. Studies show that nearly half of AI-generated code contains exploitable bugs, and many organizations are using AI to write their security protocols. You're essentially asking a system that doesn't understand security implications to design its own prison.

🚨 How to avoid it: Never deploy AI systems without a comprehensive security audit. Implement zero-trust architecture for all AI tools. Regularly scan for exposed databases and misconfigured access controls. Most importantly, never use AI-generated code for security-critical functions without human expert review.
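Audits catch exposed databases after the fact; it also helps to keep the most sensitive data from being stored in the first place. Here is a minimal sketch of one such guardrail, assuming chatbot messages pass through a storage hook you control (`store_message` and `redact_transcript` are hypothetical names) and that U.S. Social Security numbers appear in the common `123-45-6789` format. A single regular expression is nowhere near a complete filter for personal data, and it doesn't replace the audit or the access controls.

```python
import re

# Assumption: SSNs are written as three digits, two digits, four digits with hyphens.
# Real deployments need broader detection (other formats, medical and financial IDs).
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact_transcript(text: str) -> str:
    """Replace SSN-like strings before a chatbot message is written anywhere."""
    return SSN_PATTERN.sub("[REDACTED-SSN]", text)


def store_message(log: list[str], message: str) -> None:
    """Hypothetical storage hook: only redacted text ever reaches the log."""
    log.append(redact_transcript(message))


if __name__ == "__main__":
    conversation_log: list[str] = []
    store_message(conversation_log, "My SSN is 123-45-6789, can you check my claim?")
    print(conversation_log[0])
```

The design choice that matters is where the check sits: in the one path every message must take to storage, so no individual tool or team has to remember to do it.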

3. Workflow breakdown: When AI destroys the work it was meant to improve

Here's a disaster that sounds counterintuitive: AI that works exactly as designed but completely breaks your operational workflow.

Customer service departments may implement AI chatbots that handle 80% of inquiries perfectly, but the remaining 20% (the complex cases that actually require human expertise) now take three times longer to resolve. Why? Because the AI stripped away all the context and institutional knowledge that experienced staff used to triage issues effectively.

The AI optimizes for its specific function while ignoring the broader operational ecosystem it's embedded in. Research from RAND Corporation shows this is one of the primary reasons AI projects fail in production: they work in isolation but break when integrated into real work environments.

🚨 How to avoid it: Map your complete workflow before implementing AI. Identify every handoff point, dependency, and integration requirement. Test the AI systems with your actual data and real use cases, not just demo scenarios. Most importantly, involve the people who will use the system in the design process from day one.
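One way to keep a chatbot from stripping away context is to make the handoff itself carry that context. This is a minimal sketch, assuming a hypothetical `Handoff` record and a confidence score supplied by whatever bot you use; the 0.7 threshold and the field names are placeholders to tune against your own workflow map, not recommendations.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical cutoff: below this confidence the bot hands the case to a person.
ESCALATION_THRESHOLD = 0.7


@dataclass
class Handoff:
    """Context passed from the chatbot to a human agent (illustrative fields)."""
    customer_id: str
    reason: str
    transcript: list[str] = field(default_factory=list)
    bot_attempts: list[str] = field(default_factory=list)

    def brief(self) -> str:
        """Summarize what the agent needs so they don't start from scratch."""
        lines = [
            f"Customer: {self.customer_id}",
            f"Escalation reason: {self.reason}",
            f"Bot answers already tried: {len(self.bot_attempts)}",
        ]
        lines += [f"> {turn}" for turn in self.transcript]
        return "\n".join(lines)


def route(customer_id: str, transcript: list[str], bot_attempts: list[str],
          confidence: float) -> Optional[Handoff]:
    """Return a Handoff when the bot is unsure; None means the bot keeps the case."""
    if confidence >= ESCALATION_THRESHOLD:
        return None
    return Handoff(
        customer_id=customer_id,
        reason=f"bot confidence {confidence:.2f} below {ESCALATION_THRESHOLD}",
        transcript=list(transcript),
        bot_attempts=list(bot_attempts),
    )


if __name__ == "__main__":
    handoff = route("C-1042", ["Customer: my claim was denied twice"],
                    ["Sent standard appeal link"], confidence=0.42)
    if handoff is not None:
        print(handoff.brief())
```

Which fields belong in the handoff is exactly what the workflow-mapping exercise should tell you; the people who handle escalations today are the best source for that list.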

4. Cost explosions: When AI budgets become runaway trains

The fourth disaster is financial and more common than you might think. Organizations budget for the AI software but completely underestimate the infrastructure, training, and ongoing operational costs.

Say you run a modest healthcare organization and want to implement an AI diagnostic tool. The software license is $50,000 annually, which sounds reasonable at the time. But getting it to work requires upgrading your entire network infrastructure ($200,000), training your staff ($75,000), ongoing data cleaning and preparation ($150,000 annually), and hiring additional IT support ($120,000 in salaries). That $50,000 AI solution actually costs nearly $600,000 in the first year.
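Totaling the example's first-year line items makes the gap concrete. The figures are the hypothetical ones from the paragraph above; the snippet only does the arithmetic.

```python
# First-year costs from the hypothetical healthcare example (USD).
first_year_costs = {
    "software license": 50_000,
    "network infrastructure upgrade": 200_000,
    "staff training": 75_000,
    "data cleaning and preparation": 150_000,
    "additional IT support salaries": 120_000,
}


def total_cost_of_ownership(costs: dict[str, int]) -> int:
    """Sum every cost line, not just the license fee."""
    return sum(costs.values())


license_fee = first_year_costs["software license"]
total = total_cost_of_ownership(first_year_costs)

print(f"License fee alone: ${license_fee:,}")
print(f"First-year total:  ${total:,}")
print(f"Total vs. license: {total / license_fee:.1f}x")
```

The total comes to $595,000, about 11.9 times the license fee on its own.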

This kind of inflation can happen because AI systems are often data-hungry and compute-intensive. They require clean, structured data (which you probably don't have), significant processing power (which you probably can't support), and specialized expertise (which you probably need to hire). Up to 80% of AI project time is spent on data preparation, not the exciting AI work leaders envision.

🚨 How to avoid it: Calculate total cost of ownership, not just licensing fees. Include data preparation, infrastructure upgrades, training, and ongoing maintenance in your budget. Plan for costs to be 3-5x your initial estimate, especially in the first year.

5. Quality failures: The ghost in the AI machine

The final disaster is the most insidious because it's not immediately obvious: AI systems that produce outputs good enough to use but wrong enough to cause problems.

I've seen AI translation tools that maintain perfect grammar and structure while completely missing cultural context, which can lead to marketing campaigns that are technically correct but culturally offensive. I've seen AI algorithms that appear unbiased in each individual decision while systematically excluding entire demographic groups from consideration.

The danger is in having an AI system that is confidently wrong. AI systems fail safety tests at alarming rates, with some models responding to harmful prompts more often than not while appearing to function normally for routine requests.

Organizations can develop dangerous blind spots because they stop questioning AI outputs. When the system has been right 90% of the time for six months, teams stop fact-checking the recommendations. This is when the 10% error rate starts causing real damage: wrong medical diagnoses, incorrect financial projections, or flawed strategic recommendations.

🚨 How to avoid it: Implement ongoing quality monitoring, not just initial testing. Create feedback loops that capture errors and near-misses. Train teams to maintain healthy skepticism of AI outputs. Most importantly, never fully automate decisions that could cause significant harm without human oversight.
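Ongoing monitoring can be as simple as having people re-check a random sample of AI outputs every period, including the periods when everything seems fine, and raising an alert when the error rate on that sample drifts up. A minimal sketch follows, assuming reviewers record a correct/incorrect verdict per output (`Review` is a hypothetical record); the 5% sampling rate and 5% alert threshold are illustrative placeholders, and the right values depend on how much harm a single error can cause.

```python
import random
from dataclasses import dataclass


@dataclass
class Review:
    """A human reviewer's verdict on one AI output (hypothetical schema)."""
    output_id: str
    correct: bool


def sample_for_review(output_ids: list[str], rate: float, rng: random.Random) -> list[str]:
    """Pick a random share of this period's outputs for human fact-checking.

    Keep sampling even after months of good results; that is when trust
    quietly replaces checking.
    """
    if not output_ids:
        return []
    k = min(len(output_ids), max(1, round(len(output_ids) * rate)))
    return rng.sample(output_ids, k)


def check_error_rate(reviews: list[Review], threshold: float = 0.05) -> tuple[float, bool]:
    """Return (observed error rate, whether it exceeds the alert threshold)."""
    if not reviews:
        raise ValueError("no reviews recorded this period")
    errors = sum(1 for r in reviews if not r.correct)
    rate = errors / len(reviews)
    return rate, rate > threshold


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the example is repeatable
    todays_outputs = [f"out-{i}" for i in range(200)]
    picked = sample_for_review(todays_outputs, rate=0.05, rng=rng)
    # Stand-in verdicts: pretend reviewers flagged the first 2 sampled outputs as wrong.
    reviews = [Review(oid, correct=(n >= 2)) for n, oid in enumerate(picked)]
    rate, alert = check_error_rate(reviews)
    print(f"Reviewed {len(reviews)} outputs, error rate {rate:.0%}, alert={alert}")
```

Errors that reviewers catch here are also the raw material for the feedback loop: log each one with what the system produced and what the right answer was.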

The bottom line

These disasters aren't inevitable. They're predictable consequences of treating AI implementation like a simple technology upgrade instead of a serious organizational change.

The leaders who succeed with AI won’t rush into implementation. They’ll take time to understand their current systems, map their workflows, audit their security posture, and prepare their teams. They’ll recognize that buying professional-grade AI tools doesn't make them AI professionals, but it does make them responsible for learning how to use those tools safely and effectively.

From everything I’ve seen, the organizations that avoid these disasters share three core characteristics: they prioritize security from day one, they implement AI systematically rather than piecemeal, and they invest as much in change management as they do in technology.

Go out there and get it done right.

If You Only Read This... (TL;DR)

42% of AI projects are abandoned before production (more than double last year's 17%) because leaders treat AI like regular software instead of the organizational force it actually is

"Tool Chaos" disaster: Departments buy separate AI tools, creating incompatible systems that decrease productivity and fragment data

Hidden security time bomb: researchers found exposed AI databases "within minutes" and nearly half of AI-generated code contains exploitable security bugs

The 80/20 paradox: AI can handle 80% of inquiries perfectly but make the remaining 20% take 3x longer by stripping away human context

Success formula: Prioritize security from day one, implement systematically (not piecemeal), and invest as much in change management as technology

Bottom line: AI isn't a software purchase; it's professional-grade equipment requiring professional-grade expertise and organizational respect

How are you preparing your team for AI integration while mitigating risk?

SHARE your expertise in the comments

If this helped you think more strategically about AI implementation, subscribe to both Jake's Handy AI for practical technical insights and Leadership in Change for grounded leadership strategy and its intersection with AI.

Besides publishing on this topic, I help companies with AI implementation and talent development.

Here are the top 5 challenges to AI adoption that I come across:

🚩 Decentralized and Siloed Data: Information is highly fragmented and disorganized across various emails, spreadsheets, and unsynced databases. This lack of centralized, quality data is a fundamental barrier to AI.

🚩 Resistance to Change: A workplace culture where employees are accustomed to established methods creates significant inertia against adopting new technologies and processes.

🚩 Lack of Standardized Processes: An absence of standardized, repeatable, and documented business procedures creates inconsistent workflows, making it difficult to effectively integrate AI.

🚩 Talent and Skills Gaps: The workforce lacks sufficient technical skills and specialized AI expertise, which hinders the development, implementation, and adoption of AI systems.

🚩 High Costs and Unclear ROI: The significant investment required for AI, combined with the difficulty of clearly measuring its financial return, creates a major hurdle for securing funding and support.
